Generative AI is transforming industries, which creates content, designs solutions, and processes complex tasks. However, this technology can risk your systems’ security, accuracy, and ethical use. Safeguard your AI investments ensures long-term functionality and trustworthiness. This article walks you through 14 best practices to protect your generative AI systems while maximizing their potential.

1. Implement Robust Security Protocols

Implement firewalls and intrusion detection systems to continuously monitor for suspicious activity. Encrypt all communication between components to prevent data interception. Secure sensitive information with advanced encryption standards (AES) and ensure only authorized personnel can access critical files.

Update your systems regularly to patch vulnerabilities. Cyber attackers constantly evolve their strategies, and outdated systems are often their primary targets. Staying on top of updates helps close loopholes that could otherwise expose your AI to unnecessary risks.

FURTHER READING: |

1. 20+ Best LangChain Alternatives for Your Projects 2025 |

2. RAG vs Fine-tuning: Which Is Better for Improving AI Models? |

3. Web Scraping with LangChain: Tutorial for Beginners |

2. Conduct Regular Audits and Red Teaming

Audits evaluate the effectiveness of your current protections and uncover areas that need improvement. These assessments should include everything from the AI model itself to its training data and APIs.

Pair audits with red teaming in generative AI, where a team simulates potential attacks to test your system’s resilience. These simulated attacks expose weaknesses you may not have considered, enabling you to address them proactively. With an “attack to defend” approach, you can prepare your systems for real-world threats while improving overall reliability.

3. Protect Training Data

The data you use to train your generative AI is one of its most critical assets. If compromised, this data can lead to skewed outputs, ethical issues, or even legal challenges. To secure training data, start by encrypting it both at rest and in transit. Use secure storage solutions that provide restricted access based on roles and responsibilities.

Moreover, consider anonymizing sensitive data to protect user privacy. Remove personally identifiable information (PII) from datasets to ensure adherence to data protection regulations while mitigating the risk of sensitive data leaks.

4. Monitor for Misuse and Bias

Generative AI creates unintended outputs, including biased or harmful content, which arise from gaps in the training data or manipulations by bad actors. Regularly monitor the outputs of your generative AI to detect and correct any misuse.

To identify biases, employ fairness metrics and conduct impact assessments. You can catch problematic patterns early and adjust accordingly. This proactive monitoring builds confidence in your AI’s reliability and ethical compliance.

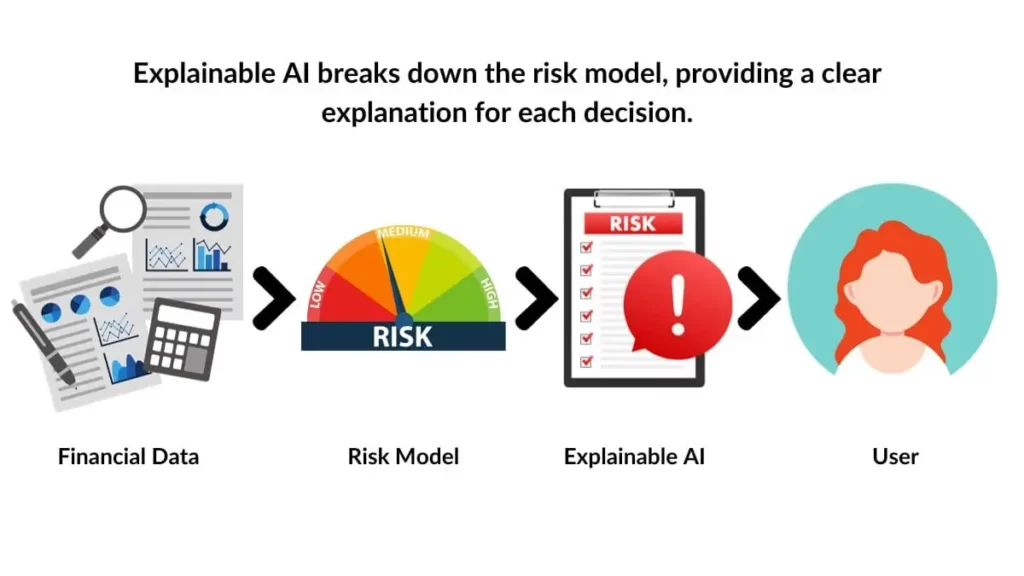

5. Use Explainable AI Tools

One of the challenges with generative AI is its “black-box” nature, where understanding the decision-making process can be difficult. Explainable AI tools solve this issue by providing insights into how the AI generates its outputs. These tools offer transparency, allowing you to track the source of errors or anomalies.

Explainability also improves your ability to detect adversarial attacks. If the system starts generating unexpected results, you can trace them back to specific inputs or vulnerabilities. This level of understanding ensures that your AI remains accountable and aligns with your objectives.

6. Secure APIs and Endpoints

Generative AI systems often integrate with other applications through APIs, which are potential entry points for attackers. To secure APIs and endpoints, implement strong authentication protocols such as OAuth 2.0 or API keys. Only authorized users and applications have permitted access to your AI systems.

Additionally, use rate-limiting mechanisms to prevent denial-of-service (DoS) attacks. Monitor API traffic for unusual patterns, such as repeated failed authentication attempts, which may indicate a breach attempt. Strengthening your API security protects your AI systems from exploitation while maintaining smooth operations.

7. Establish Ethical Guidelines

The outputs of your generative AI can have significant social and ethical implications. Establish clear ethical guidelines that define acceptable use cases for your AI. For example, restrict its use for generating harmful, misleading, or illegal content.

Embed these ethical constraints into your AI models during development. Include oversight mechanisms, such as flagging inappropriate outputs, to maintain alignment with your organization’s values. By prioritizing ethics, you not only protect your AI from misuse but also build trust with your stakeholders.

8. Stay Compliant With Regulations

Stay informed about compliance requirements like the General Data Protection Regulation (GDPR). These regulations often require stringent data protection measures that directly impact how you manage your generative AI. Conduct regular compliance checks and work with legal experts.

9. Educate Your Team

Provide training on how to detect potential threats, respond to incidents, and maintain data privacy. Encourage ongoing education by keeping your team updated on the latest cybersecurity and AI developments. It serves as your first line of defense against both external attacks and internal errors.

10. Utilize Multi-Layered Authentication

Single-factor authentication isn’t sufficient to protect sensitive systems like generative AI. Employ multi-factor authentication (MFA) that requires users to confirm their identities through two or more methods, like a one-time code and a password.

For even greater protection, consider incorporating biometric authentication, such as fingerprint or facial recognition. It lowers the risk of unauthorized access, even if login credentials are compromised.

11. Prepare an Incident Response Plan

No system is entirely immune to breaches. That’s why having a comprehensive incident response plan is essential. It should outline the steps to take if your generative AI system is compromised, including identifying the breach, containing the damage, and restoring normal operations.

Regularly test and update an incident response plan to ensure effectiveness against evolving threats. In this way, you can minimize downtime and recover quickly, maintaining the trust of your users.

12. Adopt Differential Privacy Techniques

Differential privacy techniques ensure that individual data points cannot be identified, even if an attacker gains access to the output of your model. This approach involves adding controlled noise to datasets or outputs to obscure sensitive information without compromising the overall accuracy of the AI.

Implement differential privacy methods during model training and data handling processes. These techniques help you meet regulatory requirements and build trust with users by showing your commitment to safeguarding their privacy.

13. Leverage Continuous Learning and Adaptation

Generative AI operates in a dynamic landscape where new threats and vulnerabilities emerge regularly. Relying on static security measures isn’t enough. Instead, integrate continuous learning mechanisms into your AI systems to adapt to evolving risks.

For instance, utilize machine learning models that detect anomalies in real time, enabling your AI to identify and respond to threats autonomously. Combine this with routine updates to training data, ensuring that your AI remains informed of the latest ethical, security, and operational standards. This adaptability ensures that your systems stay robust against new challenges.

14. Engage in Community Collaboration

Collaborating with the broader AI and cybersecurity community can significantly enhance your generative AI’s resilience. Participate in forums, workshops, and partnerships where organizations share best practices, tools, and insights for safeguarding AI systems.

Collaborative efforts, such as contributing to open-source security projects or engaging in AI research consortia, allow you to remain ahead of potential threats and leverage collective expertise. This proactive engagement strengthens your defenses and promotes innovation in AI security.

The Future of Generative AI Security: Emerging Trends and Innovations

As generative AI evolves, so do the threats and opportunities for securing these transformative systems. Here are a few forward-looking approaches to bolster the resilience of your AI systems:

1. Adoption of AI-Powered Security Systems

Leveraging AI to protect AI is becoming a game-changer. AI-powered cybersecurity tools analyze vast amounts of data in real time, identifying threats faster and with greater accuracy than traditional methods. These systems can detect anomalies in generative AI operations, such as unusual API requests or deviations in output patterns, and take immediate corrective actions.

2. Federated Learning for Data Privacy

Federated learning is emerging as a powerful technique to enhance data security. Instead of centralizing training data, this approach allows AI models to learn from decentralized data sources. It keeps sensitive information localized while still enabling robust model training.

3. Blockchain for Secure Data Handling

Blockchain technology is increasingly being explored to ensure the integrity of AI training data and outputs. Blockchain’s immutable ledger can verify the authenticity and origin of datasets, preventing tampering and unauthorized alterations. This approach is especially valuable for industries that rely on high levels of data security, such as healthcare and finance.

4. AI Ethics and Governance Frameworks

Organizations are starting to implement comprehensive governance frameworks to oversee AI usage. These frameworks ensure adherence to ethical guidelines and compliance with regulatory standards. By adopting transparent reporting mechanisms and establishing AI ethics boards, companies can build accountability into their AI systems.

5. Quantum-Resistant Encryption

As quantum computing develops, current encryption methods may become obsolete. Forward-thinking organizations are beginning to explore quantum-resistant encryption techniques to future-proof their generative AI systems against potential quantum attacks.

Proactively addressing emerging challenges not only secures your AI investments but also ensures ethical usage, transparency, and trustworthiness. The journey toward a secure AI future is dynamic, requiring vigilance, adaptability, and collaboration to fully harness the transformative potential of generative AI.

Bottom Line

Protecting your generative AI is a multifaceted task that requires robust security, proactive monitoring, ethical considerations, and team education. Safeguard your AI systems from threats while ensuring their reliability, transparency, and compliance. The effort you invest in protection today will pay off in the form of a resilient and trustworthy AI tomorrow.

Read more topics

You may also like