Top 10 Big Data Technologies and Tools in 2025

Big data technologies and tools are set for significant advances in 2025. Demand for data analytics has soared, and new trends are changing how businesses use data. Read this article from Designveloper for the details and examples that will shape the future of big data technologies and tools.

The Importance of Big Data Technologies and Tools

Big data technologies and tools are crucial in today's digital landscape. By helping organizations manage and analyze massive amounts of data, they support better decisions and, in turn, better business outcomes. The big data industry is still growing, with a market size of over $274 billion in 2022.

One trend in particular stands out: businesses are widely adopting generative AI to transform how they work. According to Gartner, organizations that effectively leverage big data technologies and tools will have a competitive edge. Companies such as Amazon and Google, for example, use big data to create a personalized customer experience and to run their businesses effectively.

The rise of self-service data platforms is another important development. These platforms allow non-technical users to access and analyze data without help from IT departments. Democratizing data in this way is essential for organizational innovation and agility.

In addition, cloud infrastructure is important for big data management. Cloud providers like AWS and Microsoft Azure make it easier to scale storage and processing for large amounts of data. This flexibility is necessary to support the ever-increasing volume of data generated by IoT devices and other sources.

10 Best Big Data Technologies and Tools

Big data technologies and tools have become pivotal in transforming industries. Knowing which tools are best will be helpful for every business going forward.

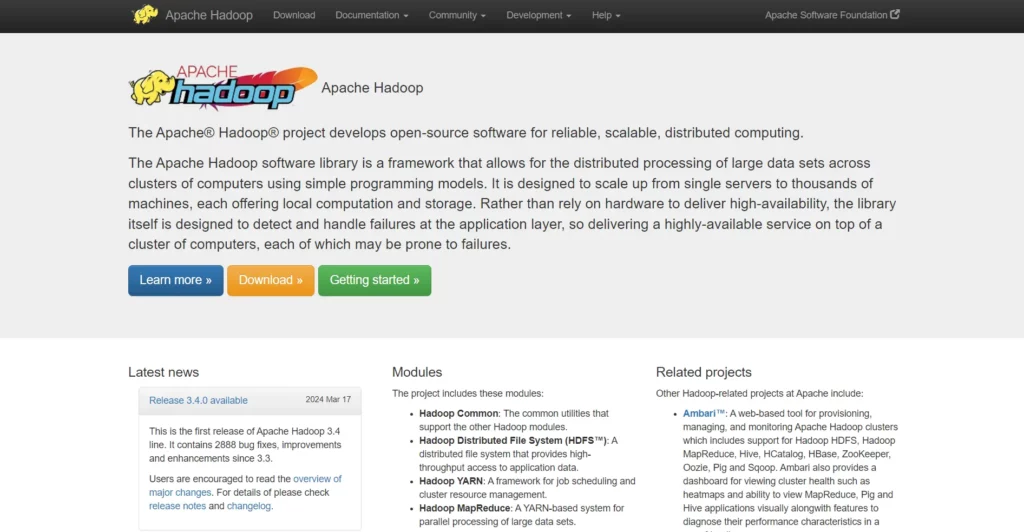

Apache Hadoop

Apache Hadoop remains a cornerstone in the world of big data technologies and tools. It is still widely popular in 2025 for its robust framework that enables distributed storage and processing of large data sets on clusters of computers. Hadoop 3.4 brought in a lot of improvements, including 2888 bug fixes and enhancements.

Hadoop's ecosystem comprises several important components. HDFS (the Hadoop Distributed File System) provides high-throughput access to application data. Hadoop YARN is a framework for job scheduling and cluster resource management. Finally, Hadoop Common provides utilities that support the other Hadoop modules.

Organizations across many industries use Hadoop for big data analytics. For example, a top e-commerce company processes billions of transactions daily on Hadoop to uncover how customers behave and what they prefer in real time. This capability enables the company to make data-driven decisions that improve customer experience and operations.
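To make the idea of distributed processing more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce pattern behind Hadoop's MapReduce processing model. It is only an illustration under stated assumptions: the transaction records and function names are hypothetical, and a real Hadoop job would run these phases in parallel across a cluster rather than in one Python process.

```python
from collections import defaultdict

# Hypothetical transaction records; on a cluster these would be stored in HDFS.
transactions = [
    {"customer": "c1", "category": "books", "amount": 12.0},
    {"customer": "c2", "category": "toys", "amount": 30.0},
    {"customer": "c1", "category": "books", "amount": 8.0},
    {"customer": "c3", "category": "toys", "amount": 5.0},
]

def map_phase(record):
    """Emit a (key, value) pair for one input record."""
    yield record["category"], record["amount"]

def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)

def run_job(records):
    """Run the three phases in sequence on one machine."""
    pairs = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

revenue_by_category = run_job(transactions)
print(revenue_by_category)
```

Because each record is mapped independently and each key is reduced independently, the same logic can be split across many machines, which is what lets this style of job scale.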

Pros

- Scalability: It scales easily from single servers to thousands of machines.

- Fault Tolerance: It is designed to detect and handle failures at the application layer.

- Open Source: It is free and widely supported by a large community.

Cons

- Complexity: It can be difficult to set up and manage.

- Resource Intensive: It consumes significant computational and storage resources.

- Competition: It faces growing competition from Apache Spark and cloud-based solutions.

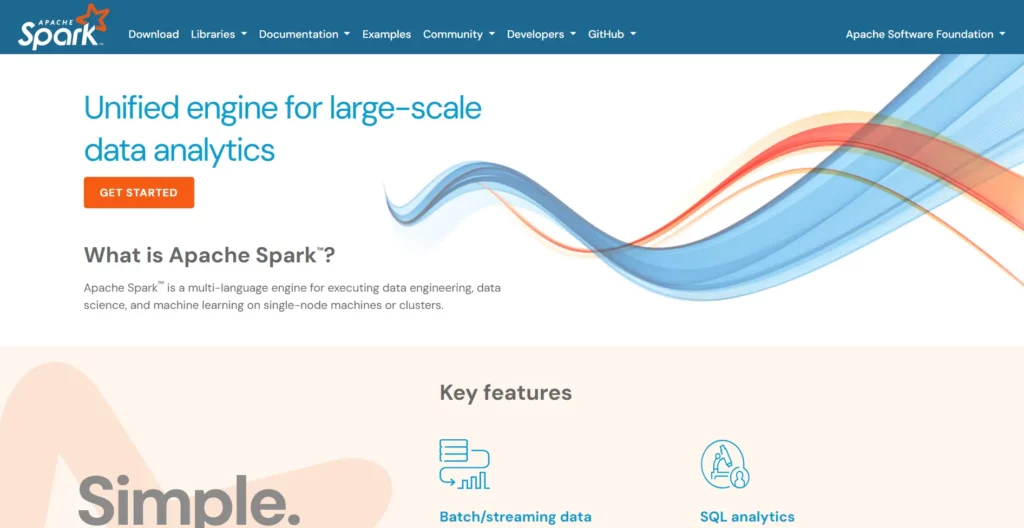

Apache Spark

Apache Spark is an open-source analytics engine for large-scale data processing. Its high-level APIs in Scala, Java, Python, and R make it accessible to a wide range of developers. Spark is known for its speed and ease of use when processing big data.

Apache Spark continued to evolve in 2024, with Spark 3.5.3 released in September of that year. The 3.5 line added features such as improved compatibility for Structured Streaming and support for distributed training and inference. Spark 4.0 was also in development at the time, promising more advanced capabilities.

Real-time data processing is one of Spark's standout features, which makes it a good fit for applications that need quick insights from large data sets. Financial institutions, among others, favor Spark for real-time analysis of transaction data to detect fraud.

Pros

- High Performance: Apache Spark is known for its fast processing speeds, which make it well suited to big data workloads.

- Versatility: It supports multiple programming languages, including Scala, Java, Python, and R.

- Real-Time Processing: It can process data in real time, which is important for applications that need immediate insight.

- Active Community: It has a large and active community that constantly improves and updates it.

Cons

- Complex Setup: It can be difficult to set up and configure initially, particularly if you're new to distributed systems.

- Resource Intensive: It requires a lot of computational resources, which can be very costly for smaller organizations.

- Learning Curve: There is a steep learning curve for those new to Spark and big data technologies and tools.

NoSQL Databases

NoSQL databases have become a cornerstone in the world of big data technologies and tools. They offer a flexible, scalable solution for managing vast amounts of both structured and unstructured data. According to 2024 projections, the global NoSQL market will reach $86.3 billion by 2032, growing at an annual rate of 28%. This growth is driven by the increasing demand for databases that can handle the complexities of modern applications.

One of the standout features of NoSQL databases is their ability to handle diverse data types efficiently. Unlike traditional SQL databases, which are optimized for structured data and complex transactions, NoSQL databases excel in speed and adaptability. This makes them ideal for applications like social media platforms, e-commerce websites, and real-time analytics.
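As a rough illustration of that flexibility, the sketch below models a document-style collection in plain Python. The `DocumentCollection` class, its methods, and the sample posts are all hypothetical and do not correspond to any real database API; the point is only that records of different shapes can sit side by side without a fixed schema.

```python
class DocumentCollection:
    """A toy in-memory collection; real document stores persist and index data."""

    def __init__(self):
        self._docs = {}
        self._next_id = 1

    def insert(self, doc):
        """Store a document of any shape and return its generated id."""
        doc_id = self._next_id
        self._docs[doc_id] = dict(doc)
        self._next_id += 1
        return doc_id

    def find(self, **criteria):
        """Return documents whose fields match every given criterion."""
        return [
            doc for doc in self._docs.values()
            if all(doc.get(field) == value for field, value in criteria.items())
        ]

posts = DocumentCollection()
# Each document carries only the fields it needs; no shared schema is declared.
posts.insert({"type": "text", "author": "ana", "body": "Hello"})
posts.insert({"type": "photo", "author": "ana",
              "url": "https://example.com/p.jpg", "tags": ["beach"]})
posts.insert({"type": "text", "author": "ben", "body": "Hi", "reply_to": 1})

ana_posts = posts.find(author="ana")
print(len(ana_posts))
```

A relational table would need a column for every possible field (or a schema migration) to hold the same three records, which is the trade-off the paragraph above describes.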

Examples of popular NoSQL databases in 2024 include:

- IBM Cloudant: Known for its serverless capabilities.

- RavenDB: Offers fully functional ACID transactions.

- Cassandra: A wide-column store that excels in scalability.

- Neo4j: A graph-based database perfect for complex relationships.

- HBase: Ideal for storing very large datasets in a column-oriented manner.

Pros

- Scalability: Easily scales to handle large volumes of data.

- Flexibility: Supports various data models, including key-value, document, column-family, and graph.

- Performance: High read/write speeds, making it suitable for real-time applications.

- Cost-effective: Often open-source, reducing costs for businesses.

Cons

- Complexity: Can be more complex to set up and manage compared to traditional SQL databases.

- Consistency: Some NoSQL databases may sacrifice consistency for performance and scalability (eventual consistency).

- Limited Transaction Support: Not all NoSQL databases support full ACID transactions.

Power BI

Power BI, developed by Microsoft, continues to be a leading tool in the realm of big data technologies and tools. In 2024, Power BI added notable new features that make it even more powerful for data visualization and business intelligence. The standout update is the integration of AI tools for root cause analysis, which can help solve business problems in minutes instead of hours. This is a big improvement over traditional methods such as analysis in Microsoft Excel.

Another noteworthy addition is Dark Mode in Power BI Desktop, which lets you customize how you view your data. The Copilot chat pane also now offers automatic text-based answers and summaries across all pages in a report, making reporting easier. These updates reflect Power BI's focus on improving user experience and productivity.

Power BI is known across industries for its robust data modeling, real-time dashboards, and custom visualizations. For instance, Pro Flight Grips Inc., a fictitious company, uses Power BI to analyze sales data across its three product lines: cord, plastic, and regular grips. This allows the company to make quick and effective business decisions.

Pros

- User-friendly interface: It is easy to navigate and use, even for non-technical users.

- Integration with Microsoft products: It integrates with other Microsoft services such as Excel and Azure.

- Real-time data updates: It provides up-to-the-minute data for accurate decision making.

- Customizable visualizations: It provides many visualization options to suit different needs.

- Mobile accessibility: Available on different devices so that users can work on the go.

Cons

- Learning curve: It can be somewhat complicated for beginners to learn all the features.

- Performance issues: It may slow down with very large datasets.

- Cost: For small businesses or individual users, paid plans can be very expensive.

- Limited data handling: It has limitations with datasets larger than 250 MB.

- Internet-dependent: Its cloud-based features require a stable internet connection.

Data Lakes

Data lakes have become a cornerstone in the world of big data technologies and tools. They are centralized repositories that store tremendous amounts of structured and unstructured data until it is needed. Because they are so flexible, they handle different types of data well, including images, videos, and audio files. Gartner reports that 39 percent of data and analytics leaders are investing in data lakes.

Scalability is one of the most notable features of data lakes. Organizations can store petabytes of data without running out of space, which is important for businesses that generate a lot of data every day. Netflix and Amazon, for example, use data lakes to manage and analyze customer data in order to provide personalized recommendations and improve the user experience.

But data lakes aren't simple. The data can be hard to manage and organize, and without governance a data lake can turn into a so-called data swamp, where the data is neither tractable nor usable. Companies are addressing this by investing in data governance tools and practices to ensure data quality and accessibility.

Pros

- Scalability: It can store a lot of data.

- Flexibility: It can handle structured, semi-structured, and unstructured data.

- Cost-effective: Storage costs are lower than with traditional data warehouses.

Cons

- Complexity: To avoid becoming a data swamp, it requires robust data governance.

- Querying Challenges: Queries can be more complex than with traditional databases.

- Maintenance: It needs continuous monitoring and management.

Tableau

Tableau is a powerful data visualization tool that allows businesses to turn raw data into actionable insights. It remained a top choice for organizations making data-driven decisions in 2024. Its interface and robust analytics make it easy to create interactive and shareable dashboards.

Tableau's adoption has surged, with more than 80,000 organizations reportedly using it around the world. Its ability to run and analyze huge datasets in real time has made it an essential tool for countless businesses.

Take the case of a retail company that used Tableau to analyze customer purchase patterns and optimize inventory management. The company visualized its sales data on a daily, weekly, and monthly basis to make informed decisions, and as a result it saw a 15% increase in revenue.

Pros

- User-friendly interface

- Enhanced data visualization capabilities

- Real-time data analysis

- Supports large datasets

Cons

- It can be expensive for small businesses.

- It takes some training to use it effectively.

- It offers fewer customization options than some other tools.

Qlik

Qlik is a powerful tool in the world of big data technologies and tools. It offers a full suite of solutions for data integration, data quality, and analytics. Qlik's platform is a popular choice for businesses of all sizes because it is designed to help organizations transform their data into valuable insights.

Qlik stands out for its real-time data analysis, which allows businesses to make smart, data-based decisions quickly. In addition, Qlik's user-friendly interface makes exploration easy, with fully interactive visualizations and dashboard creation that help users understand complex data sets.

Gartner has named Qlik as a leader in the 2024 Gartner Magic Quadrant for Analytics and Business Intelligence Platforms. This accolade highlights Qlik’s strong performance and reliability in the field of big data technologies and tools. Today Qlik has over 40,000 global customers.

Pros

- Real-time data analysis

- User-friendly interface

- Interactive visualizations and dashboards

- Recognized by Gartner as a leader

- Trusted by over 40,000 customers worldwide

Cons

- Can be complex for beginners

- More expensive than some competitors

Google BigQuery

In 2024, Google BigQuery remained a top big data technology. It is a fully managed, serverless data warehouse that runs very fast SQL queries using the processing power of Google's infrastructure. BigQuery is popular in part because it can handle both structured and unstructured data, which makes it useful for many kinds of data analytics.

Also in 2024, Google released Gemini in BigQuery, an AI-powered tool for data preparation, analysis, and engineering. Gemini offers intelligent recommendations to improve user productivity and optimize workflows. This has made BigQuery even more attractive to data professionals who want to simplify their data processes.

BigQuery supports open table formats like Apache Iceberg, Delta, and Hudi, and its streaming capability supports continuous data ingestion and analysis. The platform's scalable, distributed analysis engine can query terabytes in seconds and petabytes in minutes. It is therefore a strong choice for data-driven organizations with large amounts of data.

Pros

- Fully managed: No infrastructure management required.

- High performance: Queries terabytes in seconds.

- Versatile: It supports structured as well as unstructured data.

- AI-powered: The Gemini feature increases productivity.

- Open table formats: It supports Apache Iceberg, Delta, and Hudi.

Cons

- Cost: It can be expensive for smaller organizations.

- Complexity: It has a learning curve for new users.

- Vendor lock-in: It is tightly coupled with Google Cloud services.

R and Python

R and Python are two of the most popular programming languages for big data work. Neither language is best for everything; each has its own strengths in data analysis, visualization, and machine learning.

Python remained the leading language for data science in 2024, thanks to its simplicity, versatility, and strong libraries such as Pandas, NumPy, and Matplotlib. R, meanwhile, retains much of its popularity, particularly in academia and research, where it shines as a tool for statistical analysis and data visualization.

Industries such as finance, healthcare, and e-commerce widely use Python for tasks like predictive analytics and customer segmentation. For example, Netflix and Spotify use Python to recommend content based on user behavior. R, in contrast, is best suited to statistical modeling and bioinformatics in fields like pharmaceuticals and genomics.
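For a sense of what a customer segmentation task can look like in Python, here is a minimal sketch that uses only the standard library. The order data, the segment names, and the spend thresholds are all assumptions made up for illustration; real projects would more likely use Pandas and a statistically derived segmentation.

```python
from collections import defaultdict

# Hypothetical order history: (customer_id, order_total)
orders = [
    ("c1", 120.0), ("c1", 80.0), ("c2", 15.0),
    ("c3", 300.0), ("c3", 250.0), ("c3", 90.0), ("c4", 45.0),
]

def segment_customers(orders, high_value=500.0, mid_value=100.0):
    """Assign each customer a segment by total spend (thresholds are assumptions)."""
    totals = defaultdict(float)
    for customer_id, amount in orders:
        totals[customer_id] += amount

    segments = {}
    for customer_id, total in totals.items():
        if total >= high_value:
            segments[customer_id] = "high"
        elif total >= mid_value:
            segments[customer_id] = "mid"
        else:
            segments[customer_id] = "low"
    return segments

segments = segment_customers(orders)
print(segments)
```

The resulting segments could then feed different marketing or recommendation strategies for each group.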

Pros

- Python: Extensive libraries, easy to learn, strong community support, cross platform compatibility.

- R: Focused on statistical analysis, good tools for visualization, common among academia and research.

Cons

- Python: Generally slower for some statistical computations, though faster for operating on dataframes.

- R: Less versatile for general-purpose programming, with a steeper learning curve.

Predictive and Prescriptive Analytics Tools

Predictive and prescriptive analytics tools such as IBM Watson and SAP Analytics Cloud are essential for businesses looking to leverage big data technologies and tools. These tools help organizations forecast what is going to happen and make data-driven decisions. Predictive analytics uses historical data to forecast future outcomes; prescriptive analytics goes a step further by suggesting actions to achieve desired outcomes.
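The difference between the two can be sketched in a few lines of Python. This is a deliberately simple illustration, not how IBM Watson or SAP Analytics Cloud work internally: the sales history, the linear trend model, and the `safety_stock` rule are all assumptions chosen to keep the example small.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def forecast_demand(history, month):
    """Predictive step: extrapolate the fitted trend to a future month."""
    months = list(range(1, len(history) + 1))
    slope, intercept = fit_line(months, history)
    return slope * month + intercept

def recommend_order(forecast, stock_on_hand, safety_stock=10):
    """Prescriptive step: suggest how many units to order to cover the forecast."""
    return max(0, round(forecast + safety_stock - stock_on_hand))

monthly_units = [100, 110, 120, 130]  # hypothetical sales history
next_month = forecast_demand(monthly_units, month=5)
order = recommend_order(next_month, stock_on_hand=60)
print(next_month, order)
```

Here `forecast_demand` answers "what is likely to happen?", while `recommend_order` turns that forecast into a suggested action.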

The market for predictive and prescriptive analytics tools grew significantly in 2024. Gartner forecasts that the global predictive analytics software market will reach $18.3 billion in 2025, a compound annual growth rate (CAGR) of 21.6% from 2023. This growth is driven largely by the use of AI and machine learning across many industries.

Pros

- Enhanced Decision-Making: By providing actionable insights, these tools help businesses make informed decisions.

- Improved Efficiency: Automating data analysis saves businesses time and resources.

- Scalability: These tools can handle large datasets and scale as business needs grow.

Cons

- High Initial Cost: These tools can be expensive to implement, particularly for small businesses.

- Complexity: Some tools have a steep learning curve and require skilled personnel to operate.

- Data Privacy Concerns: Handling large volumes of data raises privacy and security concerns.

How Designveloper is Using Big Data Technologies and Tools

At Designveloper, we pride ourselves on leveraging the latest big data technologies and tools to deliver actionable insights and drive business growth for our clients. Let’s take a closer look at how we’re using these technologies to keep up with the times.

Business Analytics for Enhanced Business Processes

The Business Analysts (BAs) on our team are focused on understanding and solving our clients’ business needs. We go deep into finding pain points and areas where technological solutions can make a huge difference in efficiency and profitability. For instance, in a maritime logistics project, our BAs collaborated with clients to simplify the user experience of a customer order management system. It involved creating detailed process diagrams and drafting business requirements to make sure the system was meeting user needs.

Informed Decisions with Advanced Data Analysis

Data Analysts (DAs) are our experts in using big data to generate insights that inform strategic decisions. Our DAs use tools like Superset to collect, process, and analyze data to find patterns and trends. For example, in the same maritime logistics project, our DAs studied the usage patterns of search bars and filters in the customer order management system. The data showed that only 1% of users were using these features, so the team redesigned them to increase user engagement and search accuracy.
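A usage figure like this one typically comes from counting distinct users in an event log. The sketch below shows one way such a rate could be computed; the event data, action names, and `feature_usage_rate` function are hypothetical and are not taken from the actual project.

```python
# Hypothetical usage log: (user_id, action) pairs.
events = [
    ("u1", "view_order"), ("u2", "view_order"), ("u2", "search"),
    ("u3", "view_order"), ("u4", "create_order"), ("u5", "view_order"),
    ("u2", "filter"),
]

def feature_usage_rate(events, feature_actions):
    """Share of distinct users who triggered any of the given actions."""
    all_users = {user for user, _ in events}
    feature_users = {user for user, action in events if action in feature_actions}
    return len(feature_users) / len(all_users) if all_users else 0.0

rate = feature_usage_rate(events, {"search", "filter"})
print(f"{rate:.0%} of users used search or filters")
```

Counting distinct users rather than raw events keeps one heavy user from inflating the rate.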

Conclusion

To stay ahead in this competitive environment, businesses must continuously evaluate and adopt the latest big data technologies and tools. Doing so lets them maximize the potential of their data to improve operational efficiency, strengthen customer experience, and ultimately grow the bottom line.