What is NLP? Definition, Core Techniques & Benefits

Have you ever wondered how computers can understand and interpret human language? It’s all thanks to NLP, short for Natural Language Processing. As a subfield of computer science and artificial intelligence (AI), NLP stands behind the success of chatbots, voice assistants, and similar tools. So, what exactly is NLP, and how does it work? You’ll find the answer in today’s article! Here, we’ll cover the NLP essentials, from its definition, core components, and key techniques to its benefits and challenges for your business. Ready? Let’s dive in!

Understanding NLP (Natural Language Processing)

What is NLP?

Natural Language Processing (NLP) is a branch of AI that allows computers and machines to understand, interpret, and generate content in human language. It combines linguistics, data science, and machine learning to handle vast amounts of text or speech data. On the linguistic side, two core components are:

Syntax: This involves understanding how words are arranged to create grammatically correct sentences. For instance, English often follows a subject-verb-object order, like “The squirrel eats nuts.” NLP uses such syntactic rules to analyze sentence structure and parse sentences precisely for further processing.

Semantics: This involves interpreting what words and sentences mean. Based on the syntactic outcomes above, NLP models can understand not only the individual meaning of each word but also how that meaning changes within different sentences. For example, the word “park” could refer to a public area or the act of leaving a vehicle in a certain place, depending on the sentence.

These components power applications like chatbots, enabling them to read and respond to human requests accurately and insightfully.

In addition, more advanced NLP systems (particularly those using machine learning and deep learning) leverage pragmatics to explain how meaning shifts with context, intent, and culture.

For example, if someone says, “Can you pass the salt?” pragmatics helps NLP determine whether this is a polite request or a literal question about ability. This component allows chatbots and other applications to handle more complex language tasks, like recognizing sentiment in conversations or providing context-aware replies.

Traditional vs Modern Approaches to NLP

When it comes to NLP, we often think of smart machines that can emulate the human brain to handle natural language tasks. In fact, not all NLP systems are as “intelligent” as we might expect. Since NLP research began in the 1950s, around the time Alan Turing proposed his famous test for machine intelligence, we’ve witnessed three primary approaches. They include:

Symbolic (Rule-Based) NLP

The first approach to NLP models was symbolic.

It uses manually created rules and logical structures to help computers process language. As such, machines can follow pre-defined instructions (e.g., grammar rules) to discern sentence structures or particular sequences of words without truly understanding the language itself.

This approach can help computers handle simple tasks, yet it struggles with complex sentence structures, slang, and ambiguity.
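To make the rule-based idea concrete, here is a minimal sketch in Python. The intent names and patterns are hypothetical, invented purely for illustration. It shows how hand-written rules match expected phrasings but miss informal ones.

```python
import re

# Hand-written rules: each (hypothetical) intent is tied to a fixed pattern.
RULES = [
    ("greeting", re.compile(r"\b(hello|hi|good (morning|afternoon|evening))\b")),
    ("opening_hours", re.compile(r"\b(open|close|opening hours)\b")),
    ("refund", re.compile(r"\b(refund|money back)\b")),
]

def match_intent(text: str) -> str:
    """Return the first intent whose pattern matches, or 'unknown'."""
    lowered = text.lower()
    for intent, pattern in RULES:
        if pattern.search(lowered):
            return intent
    return "unknown"

print(match_intent("Hello there!"))                   # greeting
print(match_intent("When do you open tomorrow?"))     # opening_hours
print(match_intent("Yo, what time can I swing by?"))  # unknown
```

The last query asks about opening hours too, but because no rule anticipates that wording, the system fails to recognize it. Covering such cases means writing ever more rules by hand.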

Statistical NLP

It wasn’t until the late 1980s that many NLP models started to embrace statistical approaches, especially for machine translation. This shift was driven by the arrival of machine learning algorithms in language processing. These algorithms rely on patterns they’ve seen in previous data to help machines work out the most likely meaning of words or sentences.

To do so, they first break down text or voice data into small pieces (e.g., individual words) and try to understand what kind of words they are (e.g., nouns or verbs). They then give a probability to different meanings of a word or phrase.

For instance, if a statistical model sees the word “park,” it’ll consider the different meanings and calculate the likelihood of each one based on the surrounding context. If the word “car” appears nearby, the probability of the meaning “to leave a vehicle” will be higher.
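The “park” example can be sketched as a tiny probabilistic model. The snippet below is an illustration only: the training sentences are made up, and it assumes a Naive Bayes style calculation with add-one smoothing and equal priors, which is just one of many statistical techniques.

```python
from collections import Counter

# Hypothetical toy data: sentences illustrating each sense of "park".
SENSE_EXAMPLES = {
    "public area": [
        "children play in the park near the lake",
        "we walked through the park under the trees",
    ],
    "leave a vehicle": [
        "you can park the car behind the building",
        "she tried to park her car on the street",
    ],
}

def train(examples):
    """Count the context words seen alongside each sense."""
    counts = {
        sense: Counter(w for s in sentences for w in s.split() if w != "park")
        for sense, sentences in examples.items()
    }
    vocab = {w for c in counts.values() for w in c}
    return counts, vocab

def sense_probabilities(sentence, counts, vocab):
    """Score each sense from the known context words, then normalize to sum to 1."""
    scores = {}
    for sense, c in counts.items():
        total = sum(c.values())
        score = 1.0
        for word in sentence.lower().split():
            if word == "park" or word not in vocab:
                continue  # ignore the target word and unseen words
            score *= (c[word] + 1) / (total + len(vocab))  # add-one smoothing
        scores[sense] = score
    norm = sum(scores.values())
    return {sense: s / norm for sense, s in scores.items()}

counts, vocab = train(SENSE_EXAMPLES)
print(sense_probabilities("where can i park my car", counts, vocab))
```

Because “car” only appears in the vehicle examples, the “leave a vehicle” sense receives the higher probability for this sentence.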

Despite these benefits, statistical NLP still requires a good deal of manual setup, such as defining categories and labeling each word as a noun or verb. This job takes a lot of time and careful planning, and the results aren’t always accurate.

Modern NLP

To overcome the drawbacks of rule-based and statistical NLP, researchers turned to neural networks, which rose to prominence in the mid-2010s and marked the new era of modern NLP, also known as deep learning NLP. These networks can automatically learn the meaning and relationships of words without much human intervention or detailed rules.

One typical example is neural machine translation, as used in Google Translate. It uses sequence-to-sequence transformations to understand and interpret the flow of entire sentences without the need to match up each word across languages. This also speeds up translations and makes them more accurate.

Neural networks in NLP have also become increasingly important in healthcare. They help analyze text and notes in EHRs (Electronic Health Records) to enhance patient care, provided that data privacy is properly safeguarded.

Further, other modern NLP tasks like text summarization or sentiment analysis are handled by advanced neural networks called transformers, the architecture behind LLMs (Large Language Models). Models such as BERT or GPT look at entire sentences instead of analyzing each word in isolation, which lets them understand context deeply and generate human-like content. This helps achieve higher accuracy and a more nuanced understanding of language.

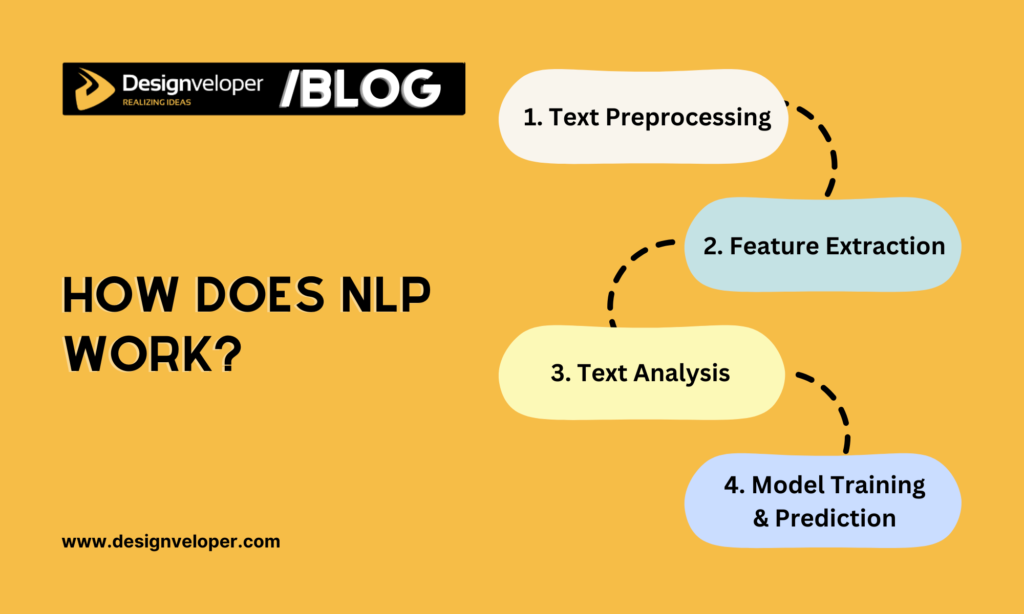

How Does NLP Work?

NLP works by combining computational methods with linguistic insights, often alongside machine learning and deep learning. Below is a detailed breakdown of how NLP works:

Step 1: Text Preprocessing

Before NLP can analyze language data, the text needs to be cleaned and standardized into a format that the model can interpret effectively. This phase includes the following steps:

- Tokenization: Break down complex text into smaller pieces (“tokens”) like individual words, phrases, or sentences.

- Lowercasing: Convert all text into lowercase so that machines can recognize words and their meanings, no matter how they’re written. Accordingly, the words “Park,” “PARK,” and “park” are treated the same.

- Stop Words Removal: Remove common words that don’t give significant meaning to the text, like “the,” “of,” or “is.”

- Stemming and Lemmatization: Reduce words to their base form (like “driving” to “drive” or “cars” to “car”). Stemming does this by trimming word endings, while lemmatization looks up the dictionary form of each word. Either way, the NLP model can group different forms of the same word together. Along with removing unnecessary elements (e.g., punctuation) that can skew the analysis, this makes the language easier for the model to analyze.
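The preprocessing steps above can be sketched in a few lines of plain Python. This is a simplified illustration, not a production pipeline: the stop word list is deliberately tiny, and the `LEMMAS` lookup table is a hypothetical stand-in for the dictionary a real lemmatizer would use.

```python
import re

# Small illustrative stop word list; real lists contain far more entries.
STOP_WORDS = {"the", "of", "is", "a", "an", "and", "in", "to", "are"}

# Hypothetical lookup table standing in for a real lemmatizer's dictionary.
LEMMAS = {"driving": "drive", "drove": "drive", "cars": "car", "parked": "park"}

def tokenize(text):
    """Split text into word tokens, dropping punctuation along the way."""
    return re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)

def preprocess(text):
    tokens = tokenize(text)                               # tokenization
    tokens = [t.lower() for t in tokens]                  # lowercasing
    tokens = [t for t in tokens if t not in STOP_WORDS]   # stop word removal
    return [LEMMAS.get(t, t) for t in tokens]             # lemmatization (lookup)

print(preprocess("The cars are PARKED in the Park."))
# ['car', 'park', 'park']
```

In practice, you would likely rely on an established NLP library for these steps, since real-world tokenization and lemmatization involve many edge cases that this sketch ignores.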

Step 2: Feature Extraction

This phase transforms text data into structured, numerical formats, because machines excel at handling numbers and performing mathematical operations. NLP often extracts features using the following techniques:

- Bag of Words (BoW): This method splits a sentence or document into individual words and then counts how often these words appear.

- Term Frequency-Inverse Document Frequency (TF-IDF): This technique evaluates how important a word is by looking at how often that word appears in a certain text and how rare that word is across various documents.

- Word Embeddings: Advanced techniques such as Word2Vec or GloVe represent each word as a list of numbers, also known as a vector. Words with similar meanings end up with similar vectors; for example, the vectors for “car” and “motorbike” are close to each other, while those for “car” and “sky” are farther apart. This helps machines better capture the meaning of these words.
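Here is a rough sketch of the three techniques above. It assumes the basic TF-IDF formula (term frequency multiplied by the log of the inverse document frequency); libraries typically use smoothed variants. The three-dimensional embeddings are hypothetical values chosen by hand, whereas real models like Word2Vec learn vectors with hundreds of dimensions.

```python
import math
from collections import Counter

def bag_of_words(tokens):
    """Count how often each word appears."""
    return Counter(tokens)

def tf_idf(docs):
    """docs: list of token lists. Returns one {word: weight} dict per document."""
    n_docs = len(docs)
    doc_freq = Counter(word for doc in docs for word in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            word: (count / len(doc)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return weights

def cosine_similarity(u, v):
    """Similarity of two vectors: close to 1 means they point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

docs = [
    "the car is parked near the park".split(),
    "the motorbike is parked in the garage".split(),
    "the sky is blue".split(),
]
print(bag_of_words(docs[0]))
print(tf_idf(docs)[0])  # "the" scores 0 because it appears in every document

# Hypothetical hand-picked embeddings, for illustration only.
EMBEDDINGS = {
    "car": [0.9, 0.8, 0.1],
    "motorbike": [0.8, 0.9, 0.2],
    "sky": [0.1, 0.2, 0.9],
}
print(cosine_similarity(EMBEDDINGS["car"], EMBEDDINGS["motorbike"]))
print(cosine_similarity(EMBEDDINGS["car"], EMBEDDINGS["sky"]))
```

Note how TF-IDF gives no weight to words that occur in every document, while the cosine similarity between “car” and “motorbike” comes out higher than between “car” and “sky.”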

Step 3: Text Analysis

This phase uses different computational techniques to analyze and understand text data for meaningful insights. There are several types of analysis, primarily revolving around:

Syntactic Analysis: This involves analyzing the structure of sentences to interpret grammatical rules and relationships between words. Syntactic analysis can leverage part-of-speech (POS) tagging to determine the grammatical categories of each word (e.g., noun or adjective).

It also uses dependency parsing to map relationships between words (e.g., “the squirrel” is the subject of the verb “eats”) or constituency parsing to understand how words combine to create larger parts of a sentence by building a syntax tree that clusters words into phrases (e.g., noun phrases).

Semantic Analysis: This builds on the syntactic outcomes to interpret the meaning of words and sentences in context. It relies on key techniques like named entity recognition (NER) to identify and classify entities (e.g., names or locations) in text. It also uses word sense disambiguation to identify the meaning of a word in a specific context, while sentiment analysis detects the emotional tone or opinion within the text.

Pragmatic and Contextual Analysis: NLP models conduct this type of analysis to understand the context and intent behind the text. They leverage coreference resolution to determine which nouns pronouns (e.g., “he” or “she”) refer to. Further, contextual embeddings are used to capture the nuanced meanings of individual words (like “park”) depending on context.
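As a small taste of syntactic analysis, the sketch below shows a toy part-of-speech tagger. It is only a baseline illustration: the mini-lexicon and suffix rules are hypothetical, and real taggers learn from large annotated corpora rather than hand-written tables.

```python
# Hypothetical mini-lexicon mapping known words to grammatical categories.
LEXICON = {
    "the": "DET", "a": "DET", "an": "DET",
    "squirrel": "NOUN", "nuts": "NOUN", "park": "NOUN",
    "eats": "VERB", "runs": "VERB",
    "he": "PRON", "she": "PRON", "it": "PRON",
}

# Fallback suffix heuristics, checked in order, for words missing from the lexicon.
SUFFIX_RULES = [("ing", "VERB"), ("ed", "VERB"), ("ly", "ADV"), ("ous", "ADJ"), ("s", "NOUN")]

def pos_tag(sentence):
    """Tag each word via lexicon lookup, then suffix rules, defaulting to NOUN."""
    tagged = []
    for raw in sentence.split():
        word = raw.strip(".,!?").lower()
        if not word:
            continue  # skip tokens that were only punctuation
        tag = LEXICON.get(word)
        if tag is None:
            tag = next((t for suffix, t in SUFFIX_RULES if word.endswith(suffix)), "NOUN")
        tagged.append((word, tag))
    return tagged

print(pos_tag("The squirrel eats nuts."))
# [('the', 'DET'), ('squirrel', 'NOUN'), ('eats', 'VERB'), ('nuts', 'NOUN')]
```

A tagger this simple will mislabel many words (for example, “park” used as a verb), which is exactly why context-aware models are needed for accurate analysis.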

Step 4: Model Training & Prediction

Once processed, the data is fed into machine learning algorithms to train NLP systems. The common goal of this training process is to help these NLP models recognize patterns and relationships within the data.

During training, NLP systems adjust their settings, or parameters, to minimize mistakes and increase their accuracy. When training is done, the systems can perform different language tasks, depending on the types of ML algorithms used and the datasets they were trained on.

For example, supervised learning algorithms train NLP models on labeled data for tasks like spam email detection. Meanwhile, unsupervised learning works with unlabeled data to automatically discover hidden patterns. That’s why it’s widely applied in clustering thousands of unlabeled documents or articles to identify common themes.
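To illustrate supervised learning, here is a minimal sketch of a spam detector. It assumes a Naive Bayes classifier with add-one smoothing, which is one common choice among many, and the six labeled emails are hypothetical. A real training set would contain thousands of examples.

```python
import math
from collections import Counter

# Hypothetical labeled training set ("ham" means a legitimate email).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("free offer click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
    ("lunch on monday with the team", "ham"),
]

def train(data):
    """Learn word counts per label from the labeled examples."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in data:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def predict(text, word_counts, label_counts, vocab):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(counts.values())
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAINING_DATA)
print(predict("free prize today", *model))           # spam
print(predict("project meeting on monday", *model))  # ham
```

The “training” here is simply counting words per label. More sophisticated models instead adjust numeric parameters step by step, but the principle of learning from labeled examples is the same.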

Today, many models like GPT come pre-trained on vast datasets to perform a wide range of language tasks. Instead of training a new model from scratch, you can fine-tune these pre-trained models with task-specific data or connect them to your existing applications.

Regardless of your choice, continuously testing and evaluating your NLP model’s performance is crucial to ensure it works well in real-world scenarios.

Pros & Cons of NLP

Natural Language Processing brings substantial benefits to companies of all kinds. However, it also comes with several challenges that can stop your business from making full use of NLP. In this section, let’s take a look at its pros and cons, along with ways to overcome the drawbacks:

Benefits

NLP can make a big difference for your business in many ways:

Automate Language-Related Tasks

NLP can automate a lot of manual, repetitive work, like resolving customer inquiries or organizing emails, with high accuracy. This saves your team time and frees them to focus on strategic initiatives.

Enhance Data-Driven Insights

NLP models can analyze massive datasets. In other words, they can sift through articles, social media posts, reviews, and more to help your business better understand what your customers really want or feel. This supports more informed decision-making.

Generate Human Language Content

By understanding and processing user prompts, NLP-powered language models like GPT-4 or Gemini can suggest ideas, draft outlines, and even create articles. These models can also adjust the tone, style, and context of the generated content to fit user requests. This speeds up the content creation process and, with human review, helps maintain content quality.

Improve Search Functionality

NLP empowers search engines by helping them understand queries better. As such, it can analyze the meaning of search terms and identify the intent behind them accurately even when queries appear vague or complex. This makes it easier to return results that contextually fit what searchers need.

Challenges

Despite these benefits, NLP also presents some limitations. Understanding these is necessary to help your business find ways to work best with NLP systems.

Data Issues

NLP models rely heavily on datasets, so it’s no wonder that data issues are a top limitation of NLP systems. One of the biggest data problems is privacy. Many users are concerned about how companies collect, store, and use their information, and therefore hesitate to provide personal and sensitive data. Data scarcity and bias are other serious issues. If a language lacks sufficient high-quality training data, or if the available data contains biases, this can limit the development of effective NLP systems.

Solution: To address data privacy and security concerns, you should use techniques like data anonymization or encryption. These techniques protect sensitive data while preserving the information that’s valuable for NLP models. Further, you may use federated learning, which enables NLP models to learn from decentralized data sources. This approach keeps data local and secure, hence mitigating privacy concerns.
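As a simple illustration of data anonymization, the sketch below masks email addresses and phone numbers before text is passed to an NLP model. The patterns are simplified assumptions and will miss many real-world formats, so treat this as a starting point rather than a complete solution.

```python
import re

# Simplified, illustrative patterns (assumptions, not exhaustive validators).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def anonymize(text):
    """Replace each detected piece of sensitive data with a placeholder label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(anonymize("Contact Jane at jane.doe@example.com or +1 (555) 010-2345."))
# Contact Jane at [EMAIL] or [PHONE].
```

Notice that the name “Jane” is left untouched. Masking names and other free-form identifiers usually requires a technique like named entity recognition rather than fixed patterns.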

For data scarcity, leveraging pre-trained language models can help your NLP model improve its performance in low-resource languages or specialized fields. Meanwhile, data bias can be mitigated with synthetic data generation. This involves producing artificial data that imitates the statistical patterns and features of real-world data to supplement existing datasets. Besides, regularly auditing and updating training data is crucial to reduce bias and ensure fairness.

Contextual & Cultural Understanding

Language often contains contextual and cultural nuances that are difficult for NLP models to understand. These include regional expressions, generational slang, sarcasm, and more. For example, if someone says, “That’s just great!” in a sarcastic tone, an NLP model may mark it as positive even though the sentence expresses a negative emotion. This can result in misinterpretations, especially in emotionally charged content.
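The sarcasm problem is easy to reproduce with a simple word-counting approach. The sketch below assumes a basic lexicon-based sentiment scorer with hypothetical word lists. It is meant to show the limitation, not to represent how modern systems work.

```python
# Hypothetical sentiment word lists, kept tiny for illustration.
POSITIVE = {"great", "good", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "broken"}

def sentiment(text):
    """Label text by counting positive and negative words, ignoring context."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("That’s just great!"))           # positive, even if meant sarcastically
print(sentiment("The app is broken and awful"))  # negative
```

Since the scorer only sees the word “great,” it has no way to detect the sarcastic tone. Picking up on that requires context, such as the preceding conversation or the speaker’s situation.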

Solution: You should incorporate context-aware algorithms (e.g., transformers with attention mechanisms) to help NLP models analyze the surrounding words and understand contextual nuances better. Further, you can combine sentiment analysis with training datasets that focus on emotional and cultural context. This allows NLP models to identify different tones easily and interpret contextual language better.

Dialects & Accents

NLP models don’t just process written text. With speech recognition projected to grow by 14.24% annually from 2024 to 2030, spoken input is expected to become increasingly common in NLP systems. However, if voice data contains mispronunciations, heavy slang, incorrect grammar, obscure dialects, and the like, NLP models have difficulty capturing exactly what speakers mean. This affects the accuracy of any downstream data analysis.

Solution: You should train NLP models on large, varied data sources that include diverse regional variations, speech patterns, and accents. Additionally, you may employ dialect-specific models and pronunciation adaptation models to process spoken dialects. You can also use speech enhancement techniques to filter out background noise and ensure clarity for voice input.

Don’t forget to periodically update and retrain NLP models with the latest data that includes new vocabulary, slang, and other language trends. Use feedback loops if possible to let human reviewers frequently assess model outputs. This enables the models to learn from mistakes and fine-tune their responses over time.

Future of NLP and Emerging Trends

In 2023, the natural language processing market recorded a global value of $24.1 billion. One study predicts that this figure will continue to increase by 23.2% annually from 2024 to 2032. The market is dominated by NLP applications like virtual assistants and chatbots, speech recognition, text analytics, and sentiment analysis. Along with this growth comes the rising adoption of advanced technologies that may shape the future of NLP:

- Conversational AI: This type of AI, which mimics human conversations, has become increasingly popular in customer support. Powered by NLP technologies, it can interpret and respond to human language inquiries easily. This leads to effective interactions with customers, personalized customer experiences, and higher engagement.

- Interactive Voice Response (IVR) and Optical Character Recognition (OCR): IVR systems are changing how businesses operate. As an automated phone system technology, IVR can communicate with a caller, direct calls to the right person, and gather information based on the caller’s voice commands and responses. Meanwhile, OCR can extract text from camera images, PDFs, and scanned documents for use in other NLP applications like language translation.

- Cloud & AI Advancements: Companies of all kinds choose cloud-based NLP solutions to minimize overall costs and time for data gathering and processing while ensuring scalability. Plus, AI advancements like deep learning techniques have greatly enhanced the performance of NLP systems by empowering them to complete tasks like content generation or language translation with higher accuracy.

How Designveloper Helps Apply NLP to Your Business

NLP has wide applications across industries. In medicine, for example, it can help healthcare professionals analyze data from electronic health records or medical research to detect health conditions early and tailor patient care. In customer service, NLP can empower chatbots to handle common queries 24/7 and route unresolved problems to the right human agents.

Given NLP’s broad applicability and substantial benefits, the demand for customized NLP app development is increasing. If you want to find a reliable partner to help you realize your NLP ideas, Designveloper is a good option!

With over a decade of experience offering software and AI development services, we’ve tailored cutting-edge, scalable solutions that meet our clients’ unique business needs. One of our outstanding projects is Song Nhi, a virtual financial assistant. This bot uses advanced tech like natural language processing and optical character recognition to help users manage their income and expenses with ease.

Here at Designveloper, we provide the following AI development services:

- AI Software Development: Our experts leverage the latest AI advancements to craft robust and scalable software applications that drive efficiency and propel your business growth.

- Generative AI: We harness the power of advanced algorithms to develop innovative and dynamic solutions for your business requirements. With our expertise, we unlock the potential of GenAI to create inspiring content, designs, and solutions.

- AI Chatbot Integration: We transform your customer experience by seamlessly integrating top-notch AI chatbots into your existing platforms. No more repetitive tasks that can slow down your communication processes.

Besides cutting-edge technologies, we adopt Agile methodologies for NLP app development. Agile frameworks such as Scrum and Kanban allow us to focus on the most critical features of your app, ensuring delivery on time and within budget. So if you want to further discuss your NLP idea, contact us now!